On 11/5/2019 at 3:20 PM, Sacaldur said: First of all, you have to think about when it's used in the entire development cycle.

First of all, you should read the repo's main README. It starts by explaining exactly that: why this format was created and who its target audience is.

In short, this format is meant to be used in engines and is optimized for fast load times, but it is also a distribution format (you can embed the materials and textures, and it is small, so it saves storage space and network traffic). What it is not is a designer format that can store every aspect of a modelling program. I leave that job to the modeller's native format (after all, those were developed for that sole purpose).

If we want to compare it to 2D images, then this format is not a PSD: it does not store layers, history, etc. It is rather a PNG, which can store all aspects of the resulting product (dimensions, EXIF data, palette, etc.) with high efficiency and compression. You won't get an undo history by opening a PNG, but you'll get the image properly and entirely, with no missing data (unlike with existing 3D model formats).

On 11/5/2019 at 3:20 PM, Sacaldur said: either small size or (what most will prefer nowadays) fast load times

You speak of these as if they were mutually exclusive. The point is, they are not. Quite the contrary, a smaller file can be loaded faster from disk :-) With this Model 3D format, you can have both (that's why I invented it!).

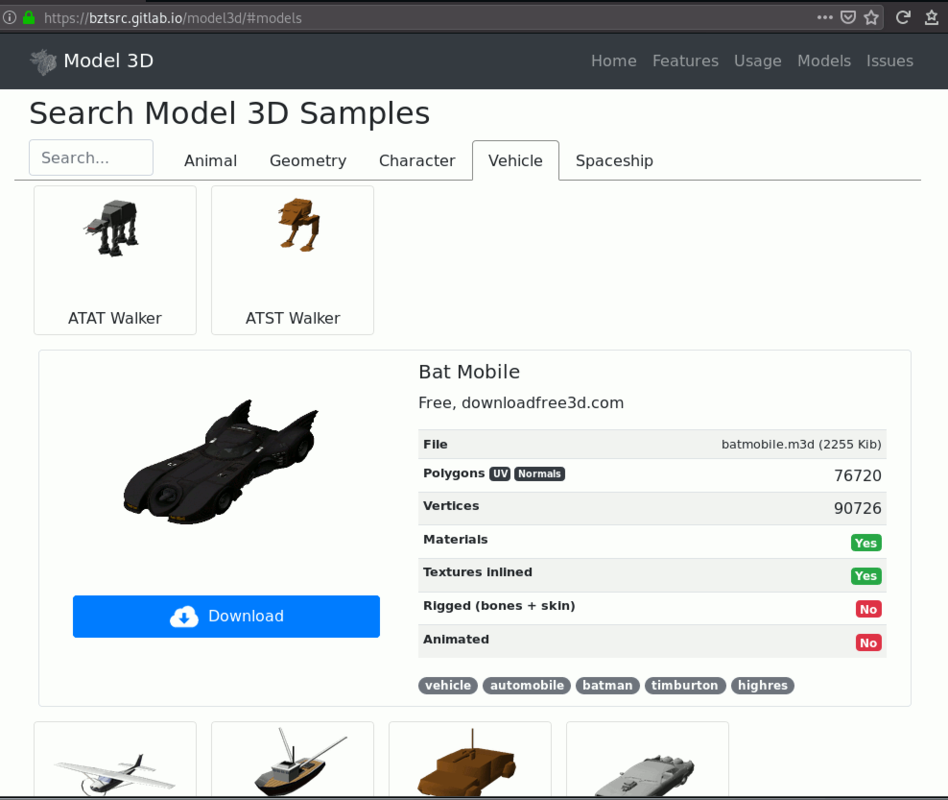

For example, I've converted a high-resolution model of the Batmobile which I'd downloaded. The original was 22 MB in binary FBX (and the textures were separate files), but in M3D it is only 1.6 MB (with textures embedded). Obviously it is faster to load and parse 1.6 MB than 22 MB. But let's put deflate aside: the plain binary M3D is only 2.4 MB (with textures), roughly 90% smaller than the binary FBX, just because it has better data representation and much higher information density. And I haven't even mentioned post-processing yet: the FBX variant needed a lot of conversion before it could finally be displayed, while the M3D version can be sent into a VBO directly (no in-memory conversion or further parsing needed at all).
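To illustrate the idea (this is not the M3D code, just a minimal sketch using Python's standard `zlib` and `struct` on a made-up vertex grid): if the stored data is already a flat float buffer, then "loading" is a single inflate, and the result can be handed over as-is. The grid, its size, and the variable names are all assumptions for the example.

```python
import struct
import zlib

# Hypothetical mesh: a 64x64 grid of vertices, 3 floats (x, y, z) each.
vertices = [(float(x), float(y), 0.0) for y in range(64) for x in range(64)]

# Pack into one contiguous little-endian float32 buffer, the kind of
# flat layout a vertex buffer upload expects.
raw = b"".join(struct.pack("<3f", *v) for v in vertices)

# Deflate for storage or network transfer.
packed = zlib.compress(raw, 9)

# Loading is one inflate call; the result is byte-identical to the
# original buffer, so no per-vertex parsing or conversion is needed.
restored = zlib.decompress(packed)

print(len(raw), "bytes raw,", len(packed), "bytes deflated")
```

The actual upload call is left out on purpose, since it depends on the graphics API you use.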

Another example: in the Minetest mobs_dwarves mod, there's a Blitz3D model with animation. It is 151 KB. After converting it into M3D, it is only 8 KB (about 5% of the original size!!!), and all the information is there, nothing is missing: you have all the materials, their names, texture references, etc., and you can replay all frames exactly as they were in the original. I can't stress enough how much work I put into making this format better than any existing format. And it is better :-D
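If you want to check the ratios yourself, here is a quick sketch of the arithmetic using only the sizes quoted in this post (the helper name `percent_of_original` is made up for the example):

```python
def percent_of_original(original, converted):
    """Return the converted size as a percentage of the original size."""
    return 100.0 * converted / original

# Batmobile model, sizes in MB: binary FBX vs. deflated and plain M3D.
fbx_to_m3d_deflated = percent_of_original(22.0, 1.6)
fbx_to_m3d_plain = percent_of_original(22.0, 2.4)

# Minetest mobs_dwarves model, sizes in KB: Blitz3D vs. M3D.
b3d_to_m3d = percent_of_original(151.0, 8.0)

print(f"deflated M3D: {fbx_to_m3d_deflated:.1f}% of the FBX")
print(f"plain M3D:    {fbx_to_m3d_plain:.1f}% of the FBX")
print(f"M3D:          {b3d_to_m3d:.1f}% of the Blitz3D file")
```

That works out to about 7.3%, 10.9% and 5.3% respectively.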

On 11/5/2019 at 3:20 PM, Sacaldur said: only the engine developers are your target audience.

No. They are definitely targets, sure, but not the only ones. This format is capable of storing all aspects of a model within a single file, because I hate that you can't download a complete model in a single file (textures are missing, the MTL file is missing, etc., which you only find out after you unzip). So it is also designed to be a model distribution format which needs neither additional files nor a zip.

On 11/5/2019 at 3:20 PM, Sacaldur said: For all of these, a text format is easier to handle

That's why it also has an ASCII variant. However, if I were in the designer's shoes, I'd prefer to store the modeller's native format (BLEND for example) instead of any text-based format (those are for interchanging models between software, not for storing all software-specific options. Hell, you can't even store a bone hierarchy in OBJ. And they are SLOOOW on load).

18 hours ago, Shaarigan said: It is fast load times in nearly any case

Agreed. It is not pleasant to download 60 MB, open it in a modeller, and wait a minute for the importer, just to find out that materials or textures are missing or named differently, so you can't actually use the model.

Let's not forget that smaller files can be downloaded much faster (which is especially important if your game is server-based and you're sending models to the clients).

Because you haven't answered my question about the sprintf, I'll assume everything is fine with it (and you shouldn't use the ASCII format in the first place :-) ).

Cheers,

bzt